
Cell colors in spreadsheets

This note is for everyone who works in Excel and Google Sheets. It's a must-read.

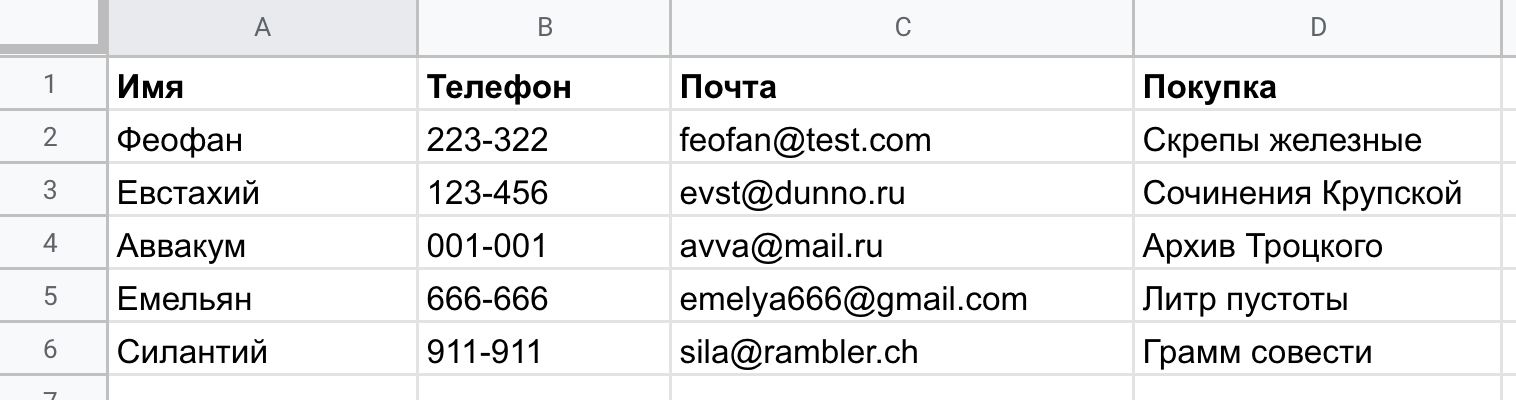

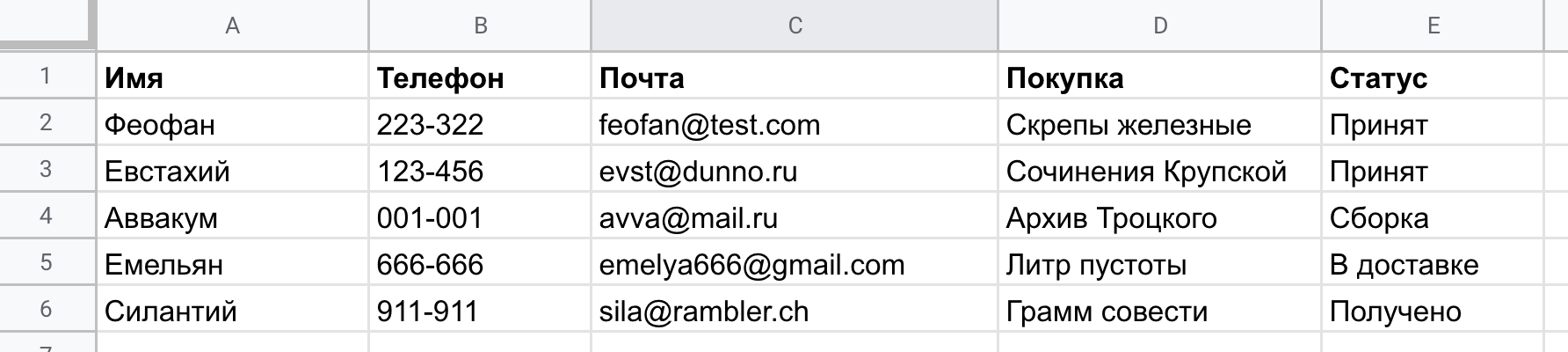

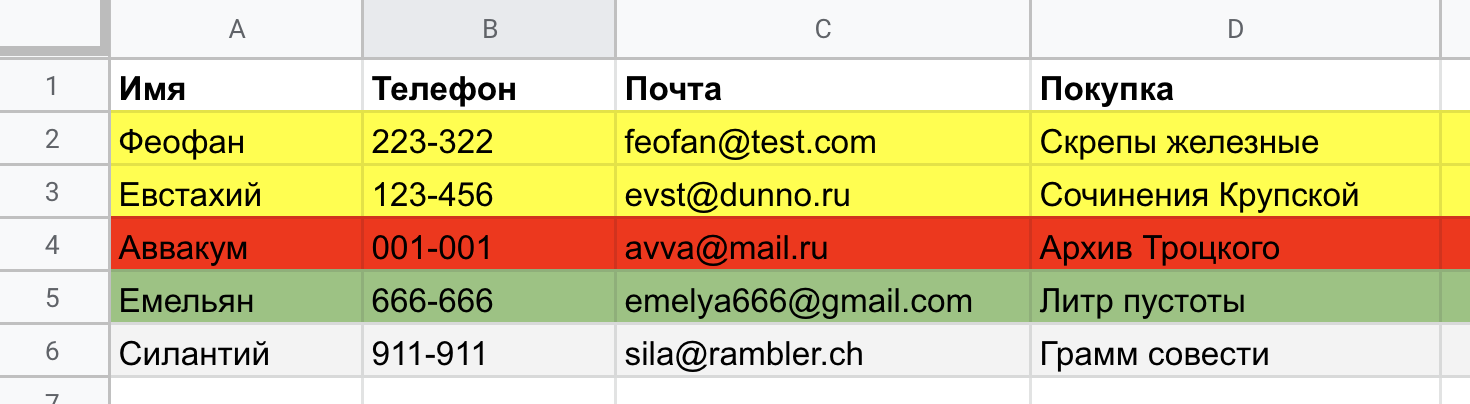

So, you've entered some data into a spreadsheet, for example, the orders in your store. It looks great:

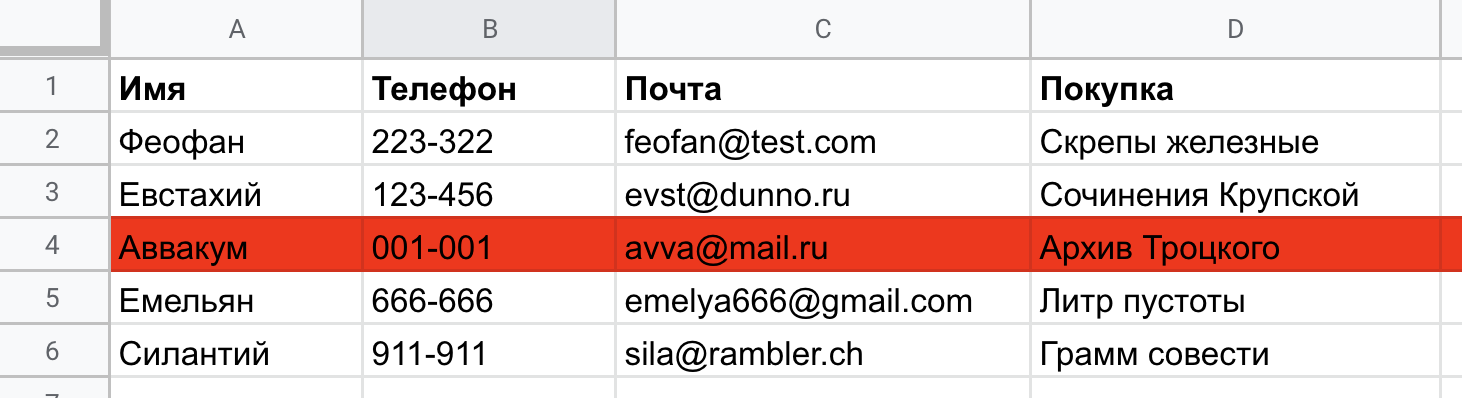

Then the data goes to work. It needs updating: you flag overdue orders and logistics errors, and you log calls. The easiest way to do that is with color. For example, let's mark in red the customers whose delivery failed. Red, because it's serious:

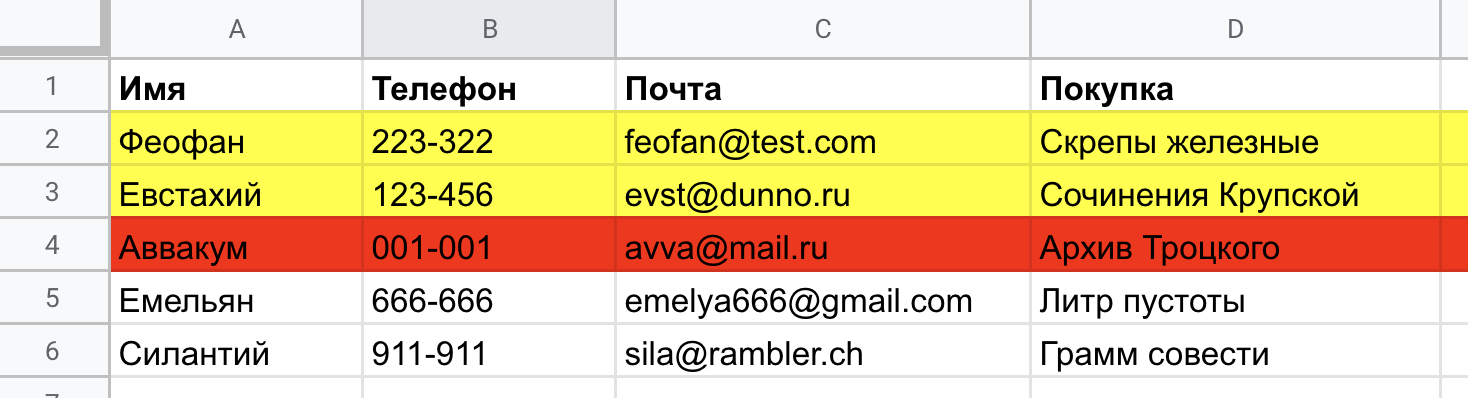

Let's highlight in yellow the ones we couldn't reach by phone:

Green goes to those who have already received their order, because green is the color of good news. One order is still being assembled, so there's nothing to worry about. Still, let's mark it gray, a neutral color. Here's what we got:

I think it's obvious that you shouldn't do this. I know everyone does it, and until recently so did I. But that's no reason to continue a bad practice. Let me explain what exactly the problem is and how to keep a spreadsheet properly.

- The logic of color has to be guessed. The idea that yellow means attention and red means danger rests on nothing. A critical order may well be orange, and a delivery error blue (a real case). Once you've added a color, you have to explain what it means. That meaning simply gets lost.

- Sooner or later the data has to be exported to CSV for programmatic processing. Congratulations: all your colors go down the drain. CSV has no notion of color. You'll have to create columns that explain the colors.

-

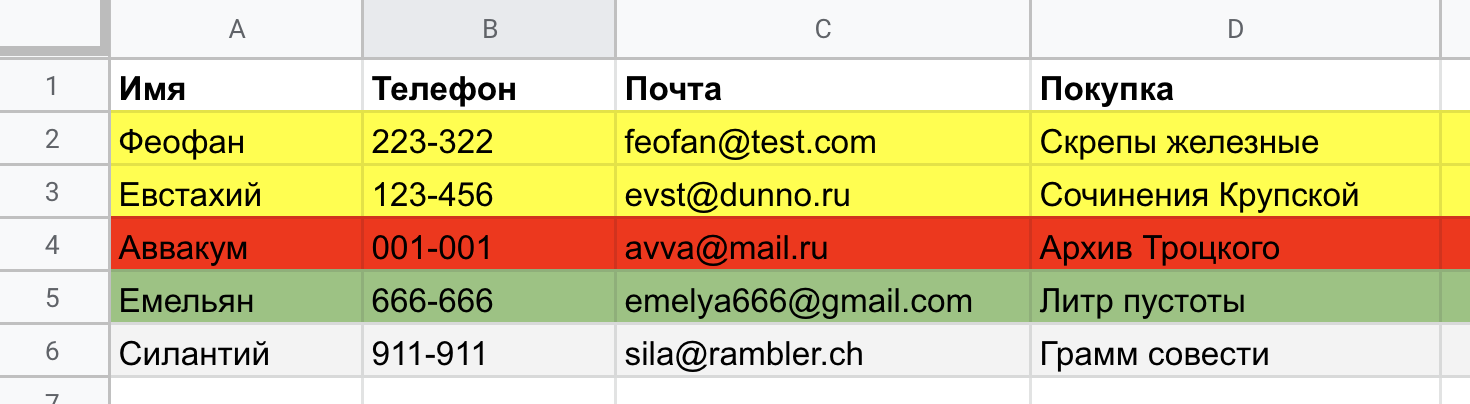

Из красивой таблицы получился фейерверк, на который невозможно смотреть. Нельзя выделить все — только что-то малое на общем фоне. Если выделено все, то на самом деле не выделено ничего — мозг отказывается это воспринимать.

- The personal factor. It infuriates me when some outsider comes into a spreadsheet and starts painting. Paint your own. Before marking anything with color, at least ask what the best approach is. Color is the last thing I'd recommend.
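The CSV point above can be sketched in a few lines of Python. This is only an illustration, assuming made-up order data and hypothetical column names (`order`, `status`, `called`) that do not come from the original spreadsheet. Whatever lives in explicit columns survives the round trip through CSV, while a cell color has nowhere to go.

```python
import csv
import io

# Hypothetical orders; the column names and values are illustrative assumptions.
orders = [
    {"order": "1001", "status": "error", "called": "FALSE"},
    {"order": "1002", "status": "received", "called": "TRUE"},
    {"order": "1003", "status": "assembling", "called": "FALSE"},
]


def to_csv(rows):
    """Serialize rows to CSV text; only explicit columns end up in the output."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["order", "status", "called"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def from_csv(text):
    """Parse CSV text back into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


csv_text = to_csv(orders)
restored = from_csv(csv_text)
```

Here `restored` holds the same rows as `orders`: the information was in the columns, so nothing was lost on export.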

Here's what to do to avoid color chaos.

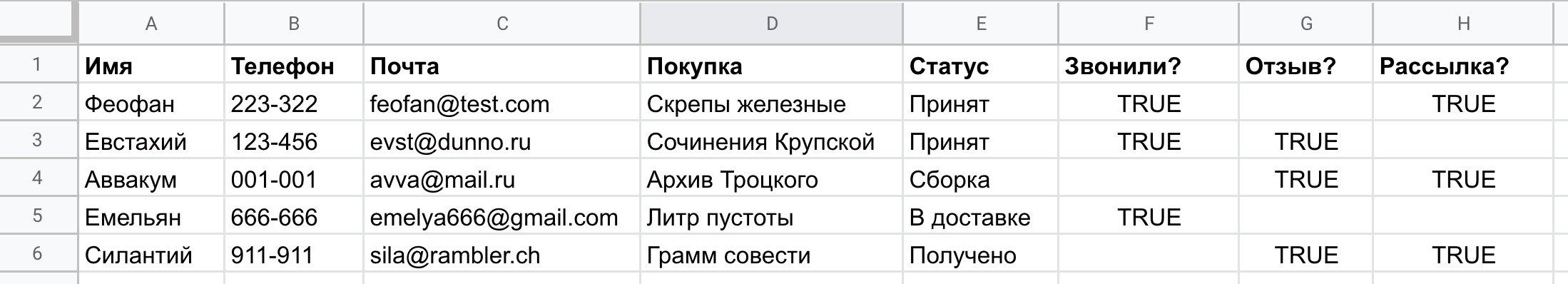

- Add a separate “status” or “state” field. Put a simple, concise word in it: assembling, delivery, received, error. All spreadsheet apps support autocompletion within a column, so it's enough to type one letter and press Enter. A programmer would call this an enumeration.

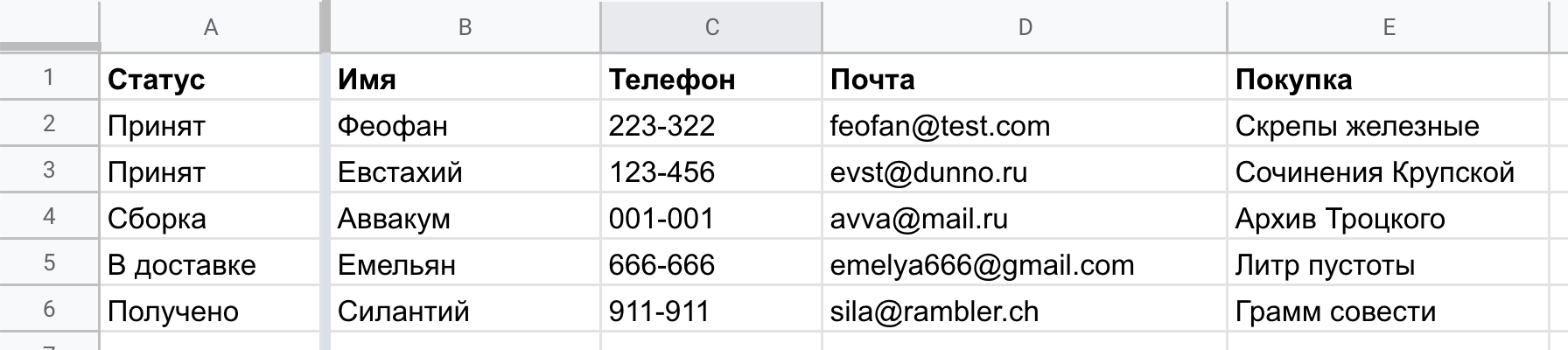

Some will object: color is visible from anywhere, while a column can scroll out of view. If it's that important, move it to the beginning and freeze it.

- Besides its state, an order can have many other marks that need recording, for example, whether the customer was called or left a review. Add a boolean field “called?” or “review?” and put TRUE in it. Add a hundred such fields if you need to.

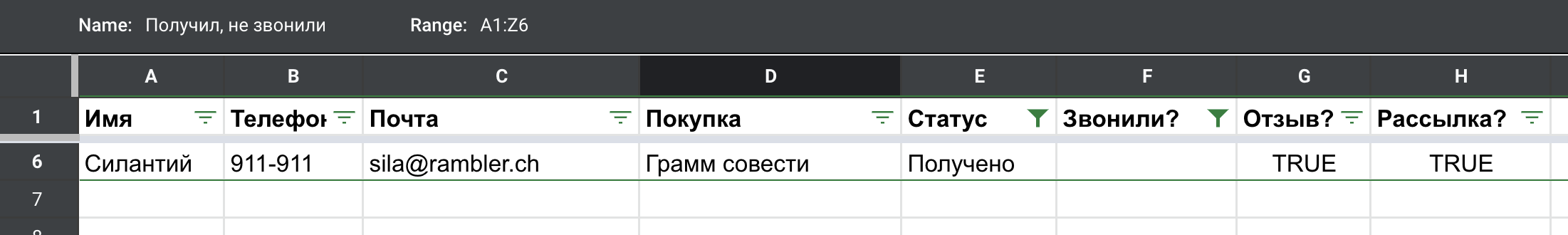

- Use filters! Select the header row and click the funnel. Set a filter on any field, for example, customers who received their order but haven't been called yet. Filters can be saved under meaningful names. In Google Sheets, filters can be global or personal; the personal ones aren't visible to other users while they work.
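For those who process such a table in code, the three tips above map onto familiar constructs. Below is a minimal sketch in Python, assuming hypothetical names (`Status`, `Order`, `received_not_called`) and invented data: the status column becomes an enumeration, the “called?” and “review?” columns become booleans, and a filter becomes a simple predicate.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    """Allowed values of the status column (the names are illustrative)."""
    ASSEMBLING = "assembling"
    DELIVERY = "delivery"
    RECEIVED = "received"
    ERROR = "error"


@dataclass
class Order:
    number: int
    status: Status
    called: bool = False   # the "called?" flag
    review: bool = False   # the "review?" flag


# Hypothetical data for illustration only.
orders = [
    Order(1001, Status.ERROR),
    Order(1002, Status.RECEIVED, called=True),
    Order(1003, Status.RECEIVED),
    Order(1004, Status.ASSEMBLING),
]


def received_not_called(rows):
    """The filter from the text: order received, customer not called yet."""
    return [o for o in rows if o.status is Status.RECEIVED and not o.called]


to_call = received_not_called(orders)
print([o.number for o in to_call])
```

None of this would be possible with colors alone: a program cannot ask a yellow cell what it means, but it can compare a status value or test a flag.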

Let's recall where we started:

Horror, horror.

Spreadsheets are a great thing. We've known them since school, but we know only the basics and think that's enough. It isn't: from time to time you need to revisit what you know. The bad habit of highlighting with color is exactly such a case.

On a related note: a post about underlining in books.

-

-

Make makefiles

I have two simple requests:

- write makefiles;

- don't pester those who write them.

Then everything will be fine.

Now the long version. When we sit down to work on a project, we run commands in the terminal: build an uberjar, build a Docker image, massage some files. For example:

-

A big, big project

I often hear that Clojure isn't suitable for big projects. People even quote Alex Miller from Twitter: “we consider Clojure a great solution for small teams in fast-changing market conditions”. I'm quoting from memory; I saw it a long time ago.

Well, if it doesn't suit someone, so be it. It suits me fine, and the company I work for, too. There will always be someone it doesn't suit; that doesn't matter. What I actually want to talk about is “big” projects: what they are and what to expect from them.

-

Purged

About three months ago I got concerned with order in my digital environment. It's the same as with regular cleaning: if you do nothing, you get overgrown with junk. Over time you lose track of what is where, and every service demands attention. In short, it was time to grit my teeth and clean up.

I went through social networks, cloud storage and pet projects. I got a tremendous kick out of dumping the ballast. The thought that a service or project will never bother you again outweighs any regret. Here's an incomplete list of what went under the knife and why.

Facebook. Absolute evil, beyond any comprehension. An interface from another world, terribly slow. The company is mired in scandals over trading in data and has lost all face. Facebook fancies itself the moderator of the whole internet and lectures people on how to think and live. Sorry, no.

VKontakte. Like Facebook, this network is overloaded with entities beyond measure. Groups, forums, discussions: I lose track of what is where. In practice VKontakte has only one decent feature, messaging, but Telegram has pushed it out. And then there's the silly name with a preposition in it.

Odnoklassniki. It's all clear here: you signed up, found your classmates and drinking buddies. After that all that's left is to look at other people's photos and answer where you work and how many kids you have. The people I need moved to the Vibers and WhatsApps long ago, so the service is of no use.

Instagram. I uploaded about ten photos there, but Instagram hasn't been about photos for a long time; it's a kind of Twitter: threads, stories, local squabbles… I don't partake.

By the way, deleting social networks turned out easier than I thought. Although the services hide the relevant option deep in the settings, the process itself causes no trouble. Rumor has it that ten years ago, to delete an Odnoklassniki account you had to send a paper letter(!) to Riga(!!) with a certified copy of your passport(!!!). I don't know if it's true, but there's no such lawlessness now. Besides, almost every social network lets you notify your friends with a message, where it makes sense to leave your email or phone number.

Twitter. I didn't close Twitter; it closed me. For a long time I scraped Twitter from a hundred fake accounts, and in the rush I once specified the keys from my main account. They banned me thoroughly: a new user with my name gets deleted within a minute. Without looking into it, the guys also took down the account of a certain Igor Grishaev (at one point I wanted to buy the nickname igrishaev from him). Twitter didn't answer any letters or requests. Oh well, goodbye.

Dropbox. I've written about this service more than once (a post, another post). In short, Dropbox was a revolution in its day but slid into a dull mass of unneeded features. The team released cloud documents, then some kind of notes, teams, a password manager… The crowning touch was a wild rebranding, a “native” app weighing 300 megabytes, and a hundred setup screens. I grabbed a couple of files, uninstalled it and closed the account. I remember the old Dropbox and mourn.

Queryfeed. I deleted my main pet project. I'd been working on it since 2011, a full ten years! In short, the service stole data from popular social networks, mostly Twitter and Instagram. While working on it I tried hundreds of techniques and tricks and all sorts of contortions. I bought gray proxies, went online through Tor, parsed HTML; I can't even recall it all. A separate fact: the project outlived more than one social network! At various times I hooked up Google Buzz and Google Plus. Both appeared and shut down, while mine kept working.

The service was paid, with a subscription via PayPal. There weren't very many customers, but it covered the hosting. In the best months it brought in about 250 dollars. To cope with the load coming from bots and scripts, I built a multi-node architecture so that I could add a new node at any moment. The business never became anything serious, and over time it started distracting me from my main job. After all, scraping third-party resources is parasitizing someone else's business. So I closed the service, didn't even bother to save backups and the rest, just killed all the nodes. The end of an era!

PayPal. I had several PayPal accounts for different projects. I kept only one, the personal one. I have almost no complaints about the service; it just works. Every couple of years it changes its interface, and that's fine.

Domains. Over the years I accumulated a fair number of domains for “my projects”. None of them became anything serious, and it's time to give up hope for a successful startup. I didn't even put them up for auction: I just deleted them, and now the domains are available to everyone.

What else? Stray Google accounts. Hosting plans I no longer use. Local files a hundred years old. How great it is to delete all this; I fervently recommend you do the same. The old clears the way for the new, and that's simply wonderful.

-

Meaning

The meaning of life is perhaps the most infantile question a person can ask. There is no more meaning in an individual's life than in the existence of a stone. What's more, the very framing of the question is poor. What does meaning have to do with it? Why not purpose or cause? With those words the question makes more sense (a funny play on words).

The trouble is that if you openly admit you see no meaning in life, people will start helping you find it. Teachers, the church, patriots and all sorts of “our grandfathers fought” types are always at the ready. Or simply those who have found their own meaning but can't stand emptiness in others. You can't go without meaning, can you!

So the most convenient behavior is to pretend you see meaning and calmly live without any.

-

What's up with the book? Six months later

There is some news about the book.

- The publisher reports that the book will soon be available on AliExpress. I'm skeptical about it, but still, a new marketplace, why not? Maybe you have bonus points, coupons and other goodies there that are long overdue to be spent? Here's your excuse. Or you're stuck in China with no book at hand, and there you go. As soon as there's a link, I'll let you know.

- Since November I've been working on the second edition of the book. It fixes many errors, and new sections have replaced old ones. The text has become more precise, and the inaccuracies in meaning are gone. There will be a separate post about the second edition.

- I made the book's repository public! You're welcome: igrishaev/clj-book. The project is entirely LaTeX, Docker and a bit of Clojure. I should note that I'm no LaTeX expert, so I'll accept any criticism and advice. I'll add build instructions to the readme a bit later.

- The book is being translated into English. Together with Evgeny Bartov we're already working on chapter seven. A long polish and the adaptation of edits from the second edition lie ahead, but someday the English book will come out. I've already been turned down by The Pragmatic Programmer because of rights issues, so I'll look for another option.

That's all for now. As you can see, almost every item deserves a separate post; I'll cover them as things progress.

-

-

Call background

Every calling app now has the option to blur the background. A useful thing. Some people call from home, and you can't help yourself: you study the wall unit full of crystal, the junk in the corner, the book spines, the mom who has walked into the room.

I don't know about the others, but in Google Meet you can replace the background with an image and even upload your own. A fun feature; my colleagues often use it. But how poor people's imagination is! One puts up a tropical island, another a server rack. How pathetic.

A background shouldn't be just a background; it should evoke some emotions and memories. In plain Russian: a MESSAGE. In five minutes I threw together some backgrounds that might resonate with the people on the call. Note that backgrounds are saved, and afterwards you can switch between them with the mouse.

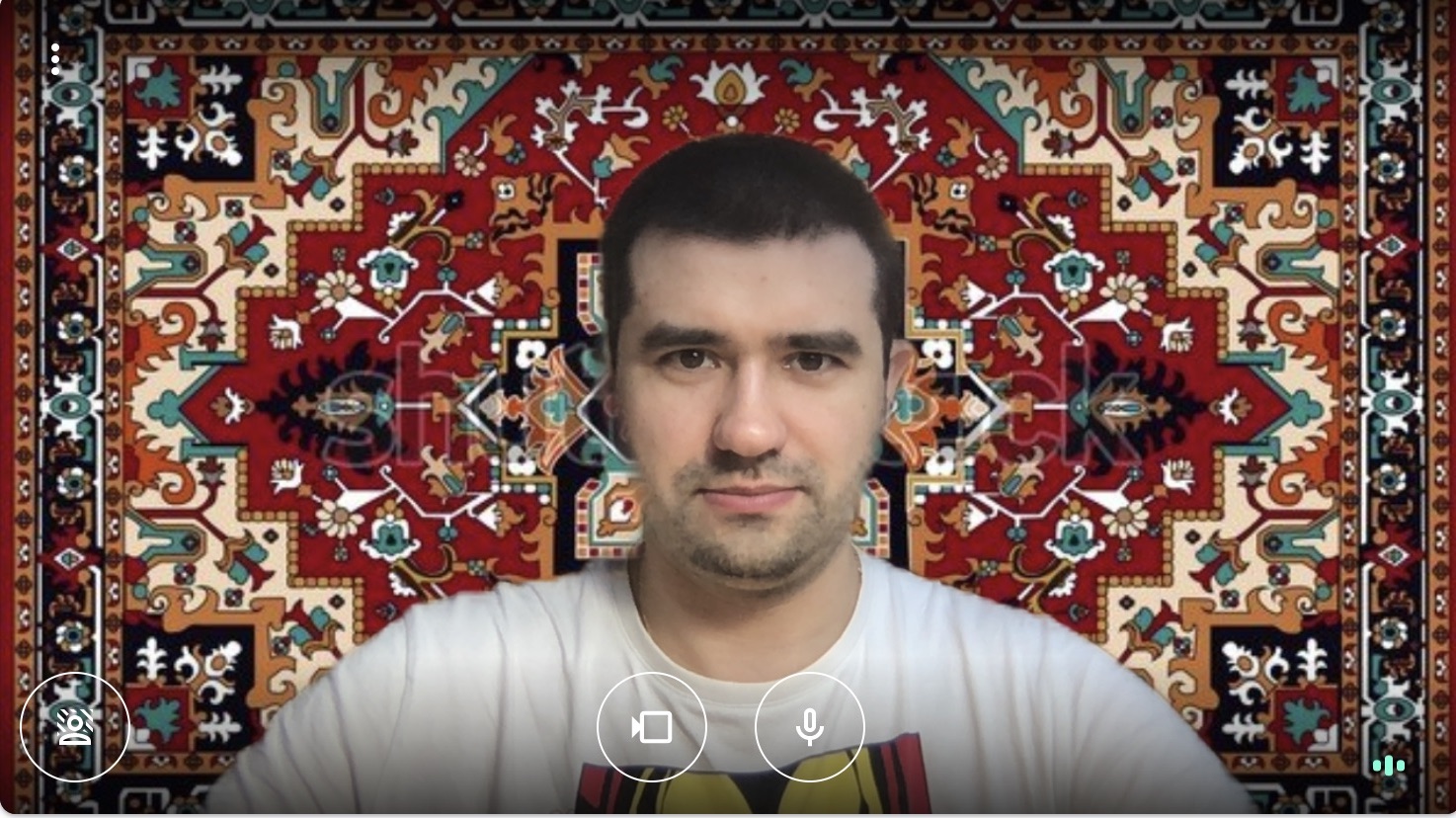

The divine carpet:

-

Ports and progress

This morning I reached for my laptop to plug a cable into USB Type-C. Only then did I notice that all four ports were taken.

Clockwise:

- monitor (Type-C → DisplayPort, 144 Hz);

- laptop power;

- device charging (phone, keyboard, trackpad, headphones);

- access token for work.

I've had the four-port laptop only recently, since autumn. I remember well that, like many others, I worried about the ports. How do I connect old devices? What about memory cards? How will I manage without HDMI? Carry a bundle of adapters around?

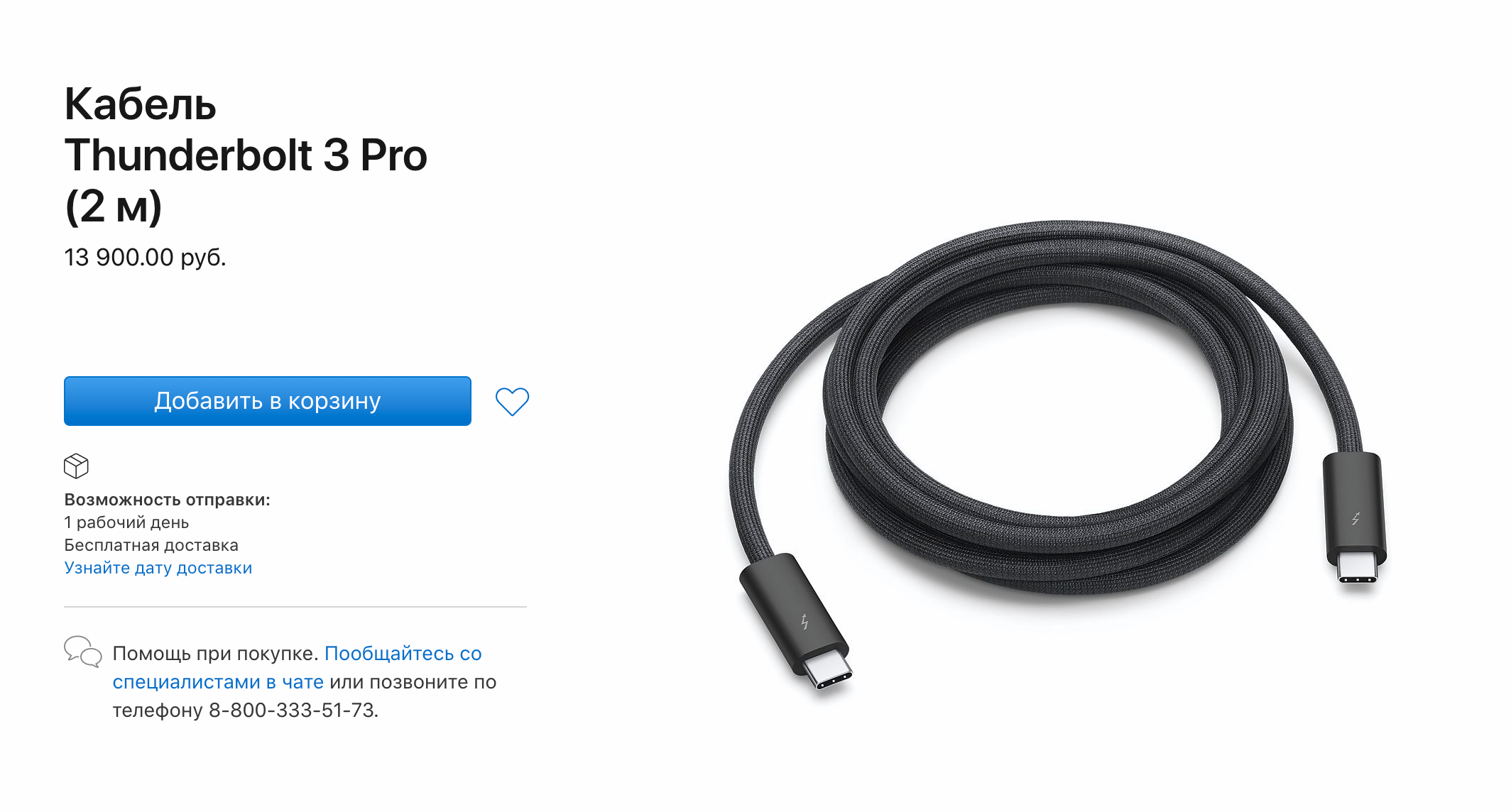

At first I had to buy a few things: the official adapter with three ports (USB, HDMI and Type-C), as well as a Chinese dongle from the new USB to the regular one.

But now I no longer use them. Plugging in other people's flash drives is unsafe, and any file is easier to send through a messenger. The HDMI output doesn't support a refresh rate above 60 Hz, and that recently became important to me (I'll write about it separately). Memory cards? It's easier to connect a relative's Android phone by cable than to dig out the card. Especially since Chinese manufacturers put it under the battery and in other interesting places.

In short, if someone had told me that soon all four Type-C ports would be taken, I'd have been surprised.

At that moment I understood what progress is. However much the general public may moan, the new port is better and more convenient. It's small, powerful, and supports all the known formats. It carries video, audio, data and power; in a word, it's fire. No wonder two meters of Thunderbolt cable cost 14(!) thousand rubles: essentially it's a wire made of a hundred wires.

For experiments I bought the short version, 0.8 meters long, and I'm happy with it.

What surprises me more is that although Apple laptops have the new ports everywhere, other devices still have the old Lightning. Even the latest iPhones. I have to keep a Type-C → Lightning cable for charging. It's time to put an end to this and use Type-C everywhere.

-

Weekend reading (issue 32)

I warmly recommend reading these two articles:

-

How to write articles for IT magazines and blogs

An interview with Andrey Pismenny, an editor at “Хакер” magazine. A very deep, warmly presented piece. I suspect that, like me, you read “Хакер” ages ago on paper. Even so, read it anyway: I haven't come across an interview of this quality in a long time.

-

In-depth: Functional programming in C++

Reflections on functional programming by John Carmack. A sensible, balanced view with sound arguments, all of it grounded in practice. After all, John and his team write games rather than solve puzzles about magic hats. Even if your English isn't great, run it through a translator. The piece is so good that I plan to make a Russian version.

-

-

Databases in Clojure (1)

In this chapter we discuss how to work with relational databases from Clojure. Most of it is devoted to the clojure.java.jdbc library and the layers built on top of it. You'll learn what problems usually accompany database access and how to solve them in Clojure.

Relational databases

Databases hold an important place in backend development. Put simply, any program comes down to processing data. Of course, data can come not only from databases but also from the network and files. On the whole, though, access to information is governed by databases: specialized programs that are complex but rich in features.

Databases, or DBs for short, come in different kinds. They differ in architecture, the way they store information, and the protocol they use with the client. Some databases run only on the client because they offer no network interface. Others store only text and leave type inference to the client. In this chapter we don't aim to cover as many DBMSs and ways of working with them as possible. On the contrary, we'll focus on what awaits you in a real project. Most likely that will be a classic relational database such as PostgreSQL or MySQL. Those are what we'll talk about.

Writing on programming, education, books and negotiations.